Yesterday I spent a bunch of time with my friend Chris (aka The Treehouse from Wigflip), who spends a lot of his own time making patches in programs like Max/MSP to generate sounds and music. Although he’s good at making all kinds of music, his favorite is definitely ambient music, which requires a lot of precise pitch, timing, and filter adjustments to get right. Since we spent a while on this, I thought I’d write it up in case others are trying to get a Kinect hooked up to Max using Processing.

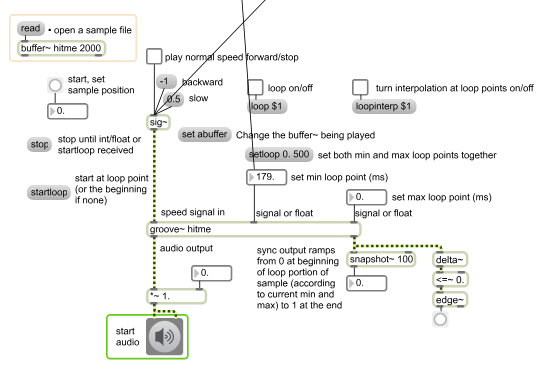

Max, made by the San Francisco-based company Cycling ’74, is an interesting programming language that enables this kind of music production: it’s a visual multimedia system that lets people control the flow of information between different modules. Instead of writing code as text, you connect blocks together in logical ways and data flows between them. For example, a module that outputs numbers, such as a controller, can be used to control another parameter, such as the pitch of a music sample. Here’s an example of a sampler in Max:

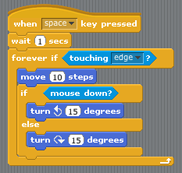

Interestingly, in their modular and visual structure, these kinds of programming languages (other examples include Pure Data and VVVV) bear some resemblance to Scratch, the programming language designed for children that we use in CS50 for the first problem set, as well as for teaching retired alumni the basics of programming.

Max is obviously far more complex and powerful, but the truth is that many aspects of programming can be abstracted much further, from the text of code into a visual realm. What we did yesterday was use Max as a “common space” for other applications to interact with. First, Chris figured out how to control a synthesizer in Logic Pro using inputs from Max. Then we thought it would be really sweet if we could hook up a Microsoft Kinect to Max to affect music using just body movements.

The first approach: TUIO

First, we tried to use TUIO to connect the two. TUIO is a protocol that can be used with multitouch devices, such as an iPad, to transfer information about how the surface is currently being manipulated. There is a library for Kinect called TUIOKinect that generates TUIO signals from the Kinect: each object recognized within the Kinect’s visual field is identified and assigned to a cursor, and the information is sent via the TUIO protocol. So, putting two hands forward towards the camera would generate two cursors, the equivalent of putting two fingers on an iPad surface, and we could then use that information.

However, when reading out this information in Max using the TUIO Client, we ran into some problems: it was hard to tell which cursor came from which object, to deal with the varying number of cursors, and so on. I think this approach would work well if you are particularly good with data processing in Max, or are willing to read up on the finer details of TUIO. We turned to Processing instead, since I knew we could get much finer-grained control over how the information from the Kinect would be handled, and we could pre-process it as we saw fit.

The second approach: Processing

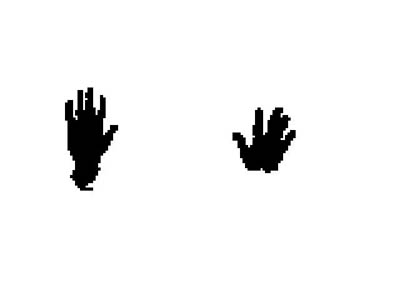

Processing is a great Java-based programming environment that was developed for precisely this kind of multimedia project. There is also an excellent Kinect library for Processing made by Daniel Shiffman, an assistant professor at NYU Tisch. Using that, it was rather simple to get the Kinect depth image, process it a bit, and then get some useful parameters to send over to Max. Here’s a brief overview of how you can use Processing to get useful information from a Kinect to later pass to Max.

First, get the depth image, which looks something like this when drawn:

Then, threshold it for a particular depth value. Basically, this defines a plane in the space in front of the camera that will be your “canvas”. You can set these parameters manually, or you can make this adjustable, either by having thresholds adjust on their own until you have the desired number of objects / pixels on the screen, or alternatively use the mouse or keyboard to adjust this when the application runs.
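Here’s a minimal sketch of that thresholding step in plain Java, so it can run outside a Processing sketch. It assumes the depth frame arrives as a flat `int` array with one value per pixel; the class and method names (`DepthThreshold`, `mask`, `autoFar`) are made up for illustration and are not part of any Kinect library. `autoFar` is one possible take on the "adjust on their own" idea: it pulls the far threshold in until few enough pixels remain.

```java
// Hypothetical helper for illustration; not part of any Kinect library.
class DepthThreshold {

    /** Returns a mask that is true where the depth value lies in [near, far]. */
    static boolean[] mask(int[] depth, int near, int far) {
        boolean[] out = new boolean[depth.length];
        for (int i = 0; i < depth.length; i++) {
            out[i] = depth[i] >= near && depth[i] <= far;
        }
        return out;
    }

    /** Counts the pixels that survived the threshold. */
    static int count(boolean[] mask) {
        int n = 0;
        for (boolean b : mask) {
            if (b) n++;
        }
        return n;
    }

    /** Pulls the far threshold in, step by step, until at most maxPixels remain. */
    static int autoFar(int[] depth, int near, int far, int step, int maxPixels) {
        if (step <= 0) {
            throw new IllegalArgumentException("step must be positive");
        }
        while (far > near && count(mask(depth, near, far)) > maxPixels) {
            far = Math.max(near, far - step); // never cross the near threshold
        }
        return far;
    }
}
```

In a real sketch you would probably call `mask` once per frame and run `autoFar` only when calibrating, since it rescans the whole frame on every step.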

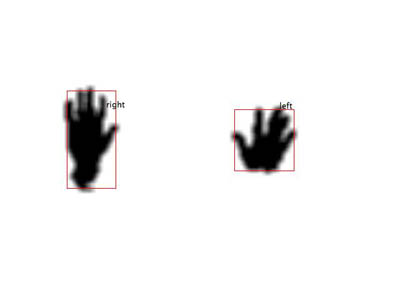

Blur the image (using a fast blur, like Quasimondo’s Superfast Blur). Then, once the image is blurred, perform blob detection. It’s easiest to do this using v3ga’s blobDetection library for Processing. Now, we have objects that we can work with:
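To give a feel for what blob detection produces, here’s a rough stand-in written in plain Java. To be clear, this is not how the blobDetection library works and it doesn’t use its API: it’s a simple flood fill over the thresholded mask that groups 4-connected pixels and reports a centroid and pixel count for each group. The class name `SimpleBlobs` and the `{cx, cy, pixelCount}` layout are assumptions made up for this example.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Illustrative stand-in only; the blobDetection library uses its own algorithm and API.
class SimpleBlobs {

    /** Returns one {centroidX, centroidY, pixelCount} entry per 4-connected region. */
    static List<double[]> find(boolean[] mask, int width, int height) {
        boolean[] seen = new boolean[mask.length];
        List<double[]> blobs = new ArrayList<>();
        ArrayDeque<Integer> stack = new ArrayDeque<>();

        for (int start = 0; start < mask.length; start++) {
            if (!mask[start] || seen[start]) continue;

            double sumX = 0, sumY = 0;
            int n = 0;
            seen[start] = true;
            stack.push(start);

            // Flood fill from this seed pixel, accumulating coordinates.
            while (!stack.isEmpty()) {
                int i = stack.pop();
                int x = i % width;
                int y = i / width;
                sumX += x;
                sumY += y;
                n++;
                if (x > 0) visit(i - 1, mask, seen, stack);
                if (x < width - 1) visit(i + 1, mask, seen, stack);
                if (y > 0) visit(i - width, mask, seen, stack);
                if (y < height - 1) visit(i + width, mask, seen, stack);
            }
            blobs.add(new double[] { sumX / n, sumY / n, n });
        }
        return blobs;
    }

    /** Queues pixel j if it is set and has not been visited yet. */
    private static void visit(int j, boolean[] mask, boolean[] seen, ArrayDeque<Integer> stack) {
        if (mask[j] && !seen[j]) {
            seen[j] = true;
            stack.push(j);
        }
    }
}
```

Blurring first matters for an approach like this too: without it, a noisy depth image tends to break one hand into many tiny regions. Filtering out blobs below some minimum pixel count is another cheap way to ignore noise.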

From this simple example, there are dozens of parameters that we can use to affect sound generation in Max. For example, we can use the x,y coordinates of the detected objects, the distance between them, the angle between multiple objects, the distance from the camera to each object, and so on.
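As a sketch of how a couple of those parameters might be computed from two blob centers, here is a small plain-Java helper. The names (`BlobParams`, `mapClamped`) and the 0 to 127 output range in the usage note are assumptions for illustration, not something from our actual patch.

```java
// Hypothetical helper for illustration.
class BlobParams {

    /** Straight-line distance in pixels between two blob centers. */
    static double distance(double x1, double y1, double x2, double y2) {
        return Math.hypot(x2 - x1, y2 - y1);
    }

    /**
     * Angle of the line from blob 1 to blob 2, in degrees.
     * Screen coordinates have y pointing down, so positive angles turn clockwise on screen.
     */
    static double angleDegrees(double x1, double y1, double x2, double y2) {
        return Math.toDegrees(Math.atan2(y2 - y1, x2 - x1));
    }

    /** Linearly rescales v from [inLo, inHi] to [outLo, outHi], clamping at the ends. */
    static double mapClamped(double v, double inLo, double inHi, double outLo, double outHi) {
        double t = (v - inLo) / (inHi - inLo);
        t = Math.max(0.0, Math.min(1.0, t));
        return outLo + t * (outHi - outLo);
    }
}
```

For instance, `mapClamped(x, 0, 640, 0, 127)` would turn a horizontal position in a 640-pixel-wide frame into a MIDI-style control value, which is often a convenient range to work with on the Max side.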

Sending OSC data to Max through MaxLink and unpacking it

Now that we’ve generated some interesting numerical values from the Kinect within Processing, it’s time to send them to Max. The easiest way we found to do this is with jklabs’ MaxLink libraries, which send UDP messages between the programs on the local subnet. Basically, UDP is a faster way to send messages because the protocol doesn’t confirm whether they were received (unlike TCP, which is used across the internet to ensure reliable delivery at the cost of increased overhead). Since we are running Processing and Max on the same computer, UDP is completely fine: packets are very unlikely to get lost.
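If you’re curious what "fire and forget" looks like in practice, here’s a small sketch using Java’s standard `java.net` datagram classes. This is only an illustration of UDP itself; I’m not claiming it matches what MaxLink does internally, and the `UdpDemo` class and its method names are made up for this example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;

// Illustration of plain UDP on the loopback interface; not MaxLink's implementation.
class UdpDemo {

    /** Opens a receiving socket on a free loopback port chosen by the OS. */
    static DatagramSocket openReceiver() {
        try {
            return new DatagramSocket(0, InetAddress.getLoopbackAddress());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Sends one datagram and returns immediately; nothing confirms delivery. */
    static void send(String message, int port) {
        byte[] data = message.getBytes(StandardCharsets.UTF_8);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(data, data.length,
                    InetAddress.getLoopbackAddress(), port));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Waits up to timeoutMs for one datagram and returns its text. */
    static String receive(DatagramSocket socket, int timeoutMs) {
        byte[] buffer = new byte[1024];
        DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
        try {
            socket.setSoTimeout(timeoutMs);
            socket.receive(packet);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(packet.getData(), 0, packet.getLength(), StandardCharsets.UTF_8);
    }
}
```

Note that `send` has no return value to check: if nobody is listening on that port, the message simply disappears. For a stream of position updates many times per second that’s a reasonable trade, since a fresh value arrives a moment later anyway.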

From Processing, the MaxLink object is created like this:

// The link to Max

MaxLink link = new MaxLink(this, "kinect");

Messages can be sent out like this once we’ve done our calculations in Processing. Notice how we are separating the different values we want to pass using spaces:

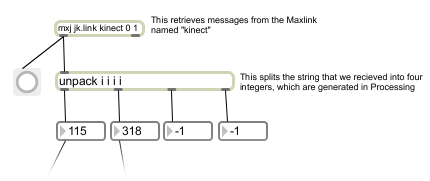

link.output(x1 + " " + y1 + " " + x2 + " " + y2);

Then in Max, the messages can be unpacked in the same order that they were constructed:

As you can see, the order in which the values are sent from Processing is the order in which they come out in Max. We found it useful to have the values default to -1 when there wasn’t useful information (for example, if there was only one object in the scene) so that this could be caught in Max. Then, these numbers can be used to control delay, pitch, etc. We played around with using a simple sampler and altering the parameters there.
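The -1 convention can be sketched like this in plain Java. The `KinectMessage` class, its fixed two-point layout, and the `unpack` method (which just mimics what the Max side does with the list) are assumptions for illustration; in the sketch itself, the packed string is what you would hand to `link.output(...)`.

```java
// Hypothetical helper showing the "-1 means no data" convention.
class KinectMessage {

    static final int MISSING = -1;
    static final int SLOTS = 2; // we always send exactly two (x, y) pairs

    /** Packs up to two {x, y} points as "x1 y1 x2 y2", filling absent points with -1. */
    static String pack(int[][] points) {
        StringBuilder sb = new StringBuilder();
        for (int slot = 0; slot < SLOTS; slot++) {
            boolean present = slot < points.length;
            int x = present ? points[slot][0] : MISSING;
            int y = present ? points[slot][1] : MISSING;
            if (slot > 0) sb.append(' ');
            sb.append(x).append(' ').append(y);
        }
        return sb.toString();
    }

    /** Splits a space-separated message back into ints, in the order they were sent. */
    static int[] unpack(String message) {
        String[] parts = message.trim().split("\\s+");
        int[] values = new int[parts.length];
        for (int i = 0; i < parts.length; i++) {
            values[i] = Integer.parseInt(parts[i]);
        }
        return values;
    }
}
```

Keeping the message the same length every frame means the unpacking on the Max side never has to change; it only has to ignore any value that comes through as -1.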

The result: interactive sound!

Body music: Connecting Kinect to Max with processing from Filip Zembowicz on Vimeo.

This is the first thing we made with this technique - have fun with this!